Semantic Understanding on OCR'd Literature Text

Today, the accessibility of ever-growing digitized textual collections in digital libraries (DL) and the rapid development of natural language processing (NLP) technologies have enabled a variety of research communities, especially scholars in digital humanities and cultural analytics, to undertake computational research in the hope of gaining new insights into historical, cultural, and social problems. Despite these remarkable efforts to open new windows to knowledge, digitizing text through machine scanning and optical character recognition (OCR) inevitably introduces OCR noise, which degrades the performance of standard NLP tools built on born-digital corpora. Moreover, the “black-box” nature of many NLP models makes OCR’d text even harder to understand, and in turn makes downstream outcomes difficult to validate.

To shed light on these issues, I propose this project as my dissertation research, focusing specifically on the robustness of word embedding techniques in encoding the semantic information of OCR’d text in DL collections. The practical impact of this project includes:

- Digital Librarians -- Identifying optimal embedding tools for encoding the information of OCR’d text in DL collections, which benefits the representation and organization of information in DL across units (e.g., page, chapter), genres (e.g., newspapers, fiction), and perhaps platforms (e.g., Project Gutenberg, HathiTrust).

- NLP Researchers and Developers -- Becoming aware of the potential limitations of existing embedding algorithms on machine-digitized text, a necessary step toward making word embeddings more robust to noisy OCR’d text.

- DL Scholarly Users (e.g., digital humanists) -- Facilitating reliable computational analysis of digitized library collections with NLP tools.

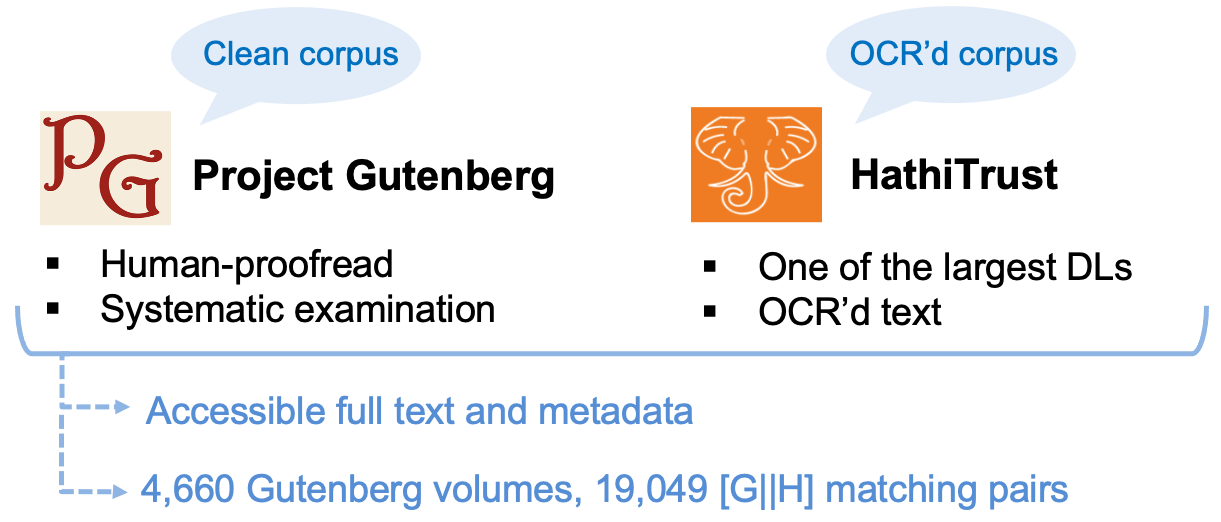

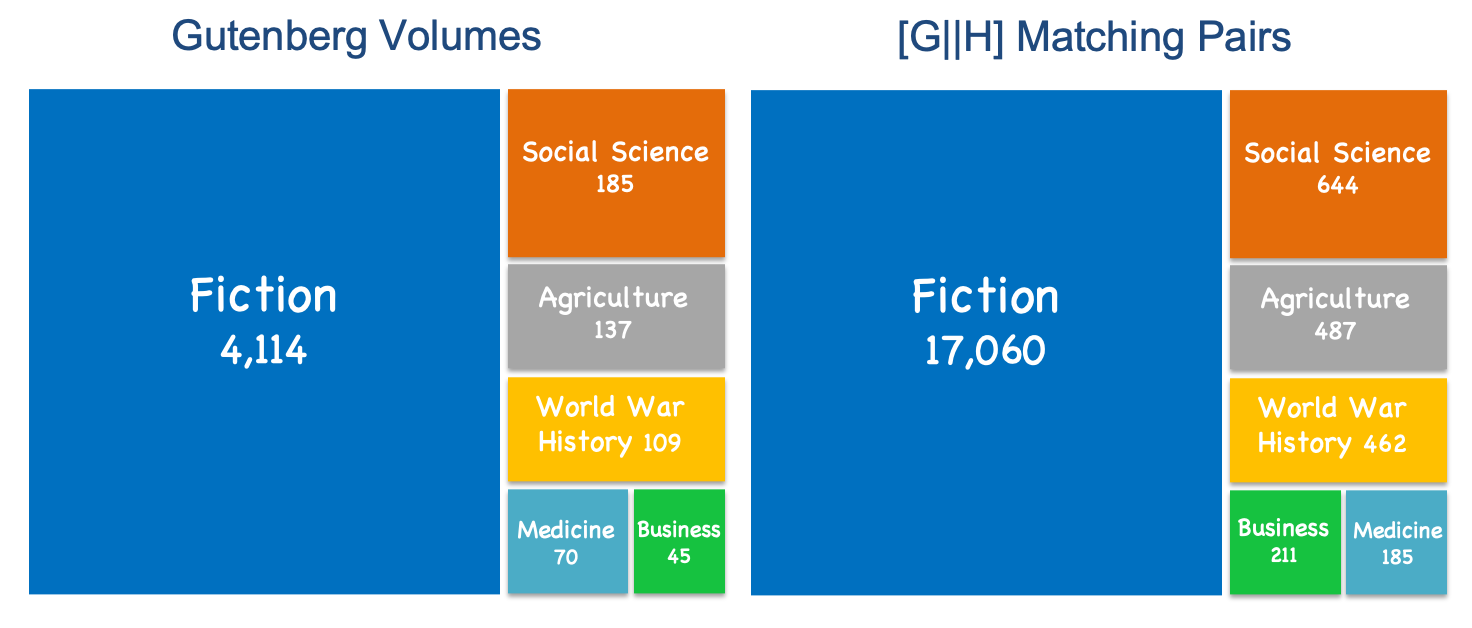

To conduct this research, I began by leading the construction of the Gutenberg-HathiTrust parallel corpus, which contains matching pairs of OCR’d (19,049 volumes) and “clean” human-proofread (4,660 volumes) English-language books in 6 domains, published between 1780 and 1993.

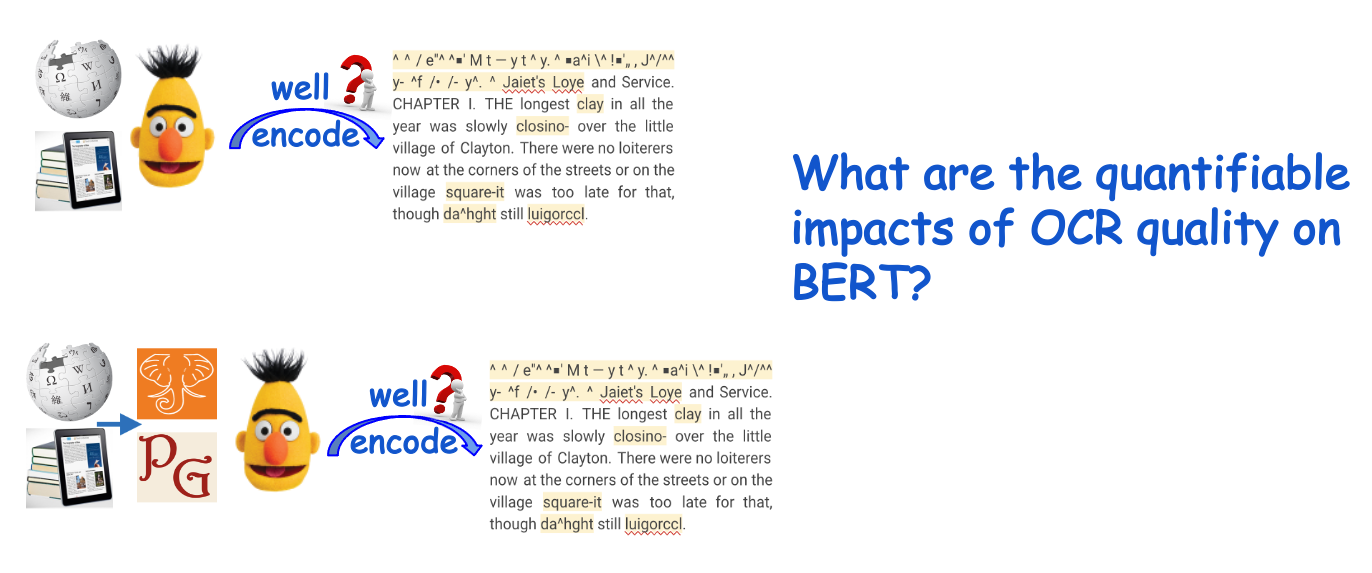

Using this parallel corpus, I led an investigation of how resilient BERT embeddings are to OCR noise in one use case: classifying book excerpts into subject domains. I also worked with the team to examine the capacity of BERT embeddings through an intrinsic retrieval task, which uses these embeddings to retrieve semantically relevant text chunks at different granularities (i.e., chapter, book, or domain level).
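The retrieval idea can be sketched roughly as follows. This is a minimal illustration, not the project’s actual pipeline: it assumes chunk embeddings (for example, pooled BERT vectors) have already been computed, and the function names `cosine` and `precision_at_k`, the toy 3-dimensional vectors, and the choice of cosine similarity with precision@k as the metric are all hypothetical. It also shows one possible way to compare clean and OCR’d versions of the same chunks.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def precision_at_k(
    embeddings: List[Vector], labels: List[Dict[str, str]], level: str, k: int
) -> float:
    """Mean precision@k over all chunks (hypothetical evaluation helper).

    Each chunk acts as a query; every other chunk is ranked by cosine
    similarity, and a retrieved chunk counts as relevant when it shares the
    query's label at `level` ("chapter", "book", or "domain")."""
    per_query = []
    for q, q_vec in enumerate(embeddings):
        candidates = [i for i in range(len(embeddings)) if i != q]
        candidates.sort(key=lambda i: cosine(q_vec, embeddings[i]), reverse=True)
        hits = sum(labels[i][level] == labels[q][level] for i in candidates[:k])
        per_query.append(hits / k)
    return sum(per_query) / len(per_query)


# Hypothetical toy data: four chunks with made-up 3-d "embeddings".
labels = [
    {"domain": "fiction", "book": "A", "chapter": "A1"},
    {"domain": "fiction", "book": "A", "chapter": "A1"},
    {"domain": "fiction", "book": "B", "chapter": "B1"},
    {"domain": "science", "book": "C", "chapter": "C1"},
]
clean = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.0], [0.7, 0.6, 0.0], [0.0, 0.1, 1.0]]
# Same chunks, but chunk 1's vector is pushed off course (a stand-in for OCR noise).
noisy = [[1.0, 0.1, 0.0], [0.2, 0.3, 0.9], [0.7, 0.6, 0.0], [0.0, 0.1, 1.0]]

print(precision_at_k(clean, labels, "domain", k=1))  # → 0.75
print(precision_at_k(noisy, labels, "domain", k=1))  # → 0.5
```

Because labels are looked up by `level`, the same function scores retrieval at chapter, book, or domain granularity; a drop in the score from the clean to the OCR’d vectors is one simple signal of sensitivity to noise.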

Why BERT? As an advanced text representation tool, one that encodes the contextual meaning of words into a vector space, BERT has two advantages over earlier techniques such as word2vec. First, because it encodes word tokens rather than word types, BERT is good at identifying the correct meaning of a homonym in its context. For example, the word “bank” in the phrases “river bank” and “savings bank” receives two different vector representations in BERT. Second, through transfer learning, BERT can acquire general linguistic knowledge from a massive, high-resource corpus and apply it to specialized downstream tasks, which may be low-resource. Because of these practical benefits, NLP practitioners such as DH scholars have become increasingly interested in using this technique in their research on digitized texts. I therefore believe that the interaction between OCR’d text and BERT is a very worthwhile research topic.
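The token-versus-type distinction can be illustrated with a toy example. This is a deliberately simplified sketch, not BERT itself: the 3-dimensional vectors are invented, and the contextual step is a single parameter-free scaled dot-product self-attention pass, with none of the learned projections, multiple heads, or stacked layers that BERT uses. The names `STATIC`, `static_embed`, and `contextual_embed` are hypothetical. The sketch only shows why a context-sensitive encoder can give “bank” different vectors in “river bank” and “savings bank” while a static lookup cannot.

```python
import math
from typing import Dict, List

# Hypothetical static (type-level) embeddings: one fixed vector per word type,
# as in word2vec. The values are made up for illustration.
STATIC: Dict[str, List[float]] = {
    "river": [1.0, 0.0, 0.0],
    "savings": [0.0, 1.0, 0.0],
    "bank": [0.5, 0.5, 0.0],
}


def static_embed(tokens: List[str]) -> List[List[float]]:
    """Type-level lookup: a word gets the same vector in every context."""
    return [STATIC[t] for t in tokens]


def contextual_embed(tokens: List[str]) -> List[List[float]]:
    """Token-level vectors from one parameter-free self-attention step.

    Each output is a softmax-weighted mix of all input vectors in the
    sequence (weights from scaled dot products), so it depends on context."""
    xs = static_embed(tokens)
    d = len(xs[0])
    out = []
    for q in xs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        out.append([sum(w * v[i] for w, v in zip(weights, xs)) for i in range(d)])
    return out


print(static_embed(["river", "bank"])[1] == static_embed(["savings", "bank"])[1])  # → True
print(contextual_embed(["river", "bank"])[1])    # → [0.75, 0.25, 0.0]
print(contextual_embed(["savings", "bank"])[1])  # → [0.25, 0.75, 0.0]
```

The static lookup returns an identical vector for both occurrences of “bank”, whereas the attention step pulls each occurrence toward its neighbor, which is the intuition behind token-level representations.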

I presented our research outcomes so far at iConference, JCDL, and CHR in 2021. To achieve my goal of promoting reliable semantic understanding of digitized textual collections, I know I still have a long way to go. My next step is to continue my dissertation work, focusing on how BERT and other word embedding tools perform on OCR’d text at different text granularities and across different downstream NLP tasks. If you are interested in this research and would like to chat, please feel free to reach out to me!

Last but not least, I would like to express my sincere appreciation to all my brilliant collaborators (Yuerong Hu, Glen Worthy, Ryan C. Dubnicek, Boris Capitanu, Deren Kudeki, Ted Underwood, and J. Stephen Downie) and my dissertation proposal committee (J. Stephen Downie, Allen Renear, Ted Underwood, Halil Kilicoglu, and Zoe LeBlanc) for their valuable advice and great support in this research.